Apple Acquisition of UC San Diego Startup Paves Way for Further Robotics Research at UC San Diego

By:

- Doug Ramsey

The Qualcomm Institute is taking over the Machine Perception Lab and its Diego-san robot after the lab’s top scientists departed following the sale of their startup, Emotient, to Apple.

Two years ago a team of six Ph.D. scientists at the University of California, San Diego decided to commercialize their artificial-intelligence (AI) technology for reading emotions based on facial recognition and analysis. They launched the startup, San Diego-based Emotient, Inc., which grew to more than 50 employees as of the end of 2015.

Now, the Wall Street Journal reports that Apple, Inc. has confirmed its purchase of Emotient for an undisclosed price. As part of the deal, Emotient’s three co-founders from UC San Diego – Javier Movellan, Marian Stewart Bartlett and Gwen Littlewort – agreed to leave the university to join Apple in Cupertino, Calif., along with at least four former UC San Diego students who are currently employed by Emotient. Last week was the team’s first as Apple employees.

MPLab and Emotient co-founder Javier Movellan joined Apple last week

The Emotient leadership team will also leave behind the research group they created: the Machine Perception Laboratory, now based in the Qualcomm Institute, which is the UCSD division of the California Institute for Telecommunications and Information Technology (Calit2). Movellan, Bartlett and Littlewort will also step down as researchers affiliated with the university’s Institute for Neural Computation (INC).

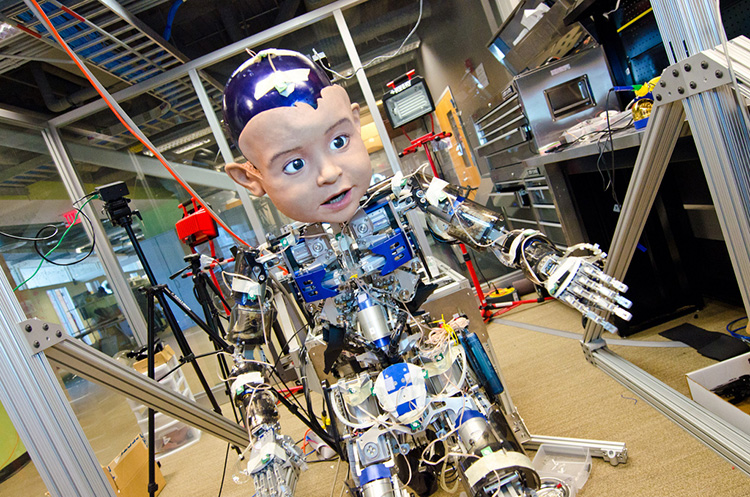

According to Qualcomm Institute Director Ramesh Rao, Movellan and his colleagues will leave behind a research lab developed over the past decade, as well as a state-of-the-art robot named Diego-san (a fully built robot originally designed to approximate the intelligence of a one-year-old human).

“The Qualcomm Institute will take advantage of past involvement with the Machine Perception Lab and will reconfigure the facility to expand use of Diego-san research as a testbed for developing new software and hardware for more specialized robotic systems,” said QI’s Rao. “We are exploring ways to showcase the Diego-san robot while also leveraging the lab for faculty and staff researchers to develop other types of robotic systems to serve a variety of purposes and environments.”

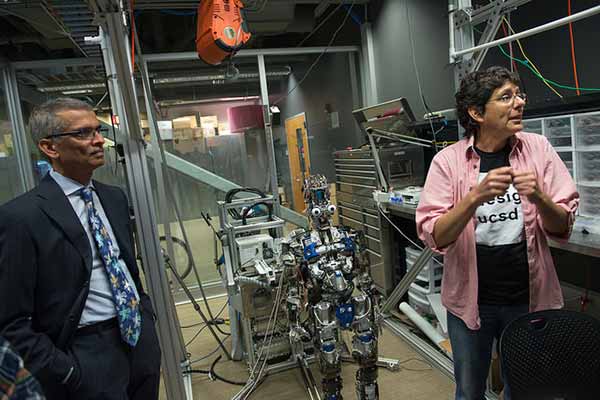

The Machine Perception Lab in the Qualcomm Institute, with QI director Ramesh Rao and lab researcher Deborah Forster.

The MPLab is best known for developing AI systems to analyze facial and body gestures. The lab, for example, developed the algorithm that became the centerpiece of Sony’s “Smile Shutter” technology, similar to features now built into many consumer digital cameras (to prevent snapping a photo if the subject is not smiling). The lab also developed several generations of RUBI, a robot designed for applications such as early childhood education (for teaching pre-schoolers to interact with, and learn from, the robot).

In 2012, Movellan, Bartlett and Littlewort set up Emotient off-campus to create a commercial leader in “emotion detection and sentiment analysis.” The company was at the “vanguard of a new wave of emotion analysis that will lead to a quantum leap in customer understanding and emotion-aware computing,” according to the company’s website. “Emotient’s cloud-based services deliver direct measurement of a customer’s unfiltered emotional response to ads, content, products and customer service or sales interactions.”

In May 2015, Emotient received a U.S. patent on its software to crowdsource, collect and label up to 100,000 facial images daily to track expressions and what they say about a person’s emotional state. A year earlier, Emotient filed a patent application for its system to “analyze and identify people’s moods based on a variety of clues, including facial expression,” the Wall Street Journal reported. According to prior claims by the startup, its Emotient Analytics system delivered over 95 percent accuracy in detecting primary emotions based on single video frames in real-world as well as controlled conditions.

The startup’s technology has already helped advertisers assess how viewers are reacting to advertisements in real time. Physicians have used Emotient software to interpret pain levels in patients who otherwise have difficulty expressing what they’re feeling, while a retailer has employed the company’s AI technology to monitor consumers’ reactions to products on store shelves.

Apple has made no public comment about its buyout of Emotient, nor about how it intends to use the startup’s technology. Time magazine, however, suggested that “camera software that can read subtle facial movements could allow for a more advanced photo library on the iPhone,” perhaps through a combination of features offered by Emotient and improved search capabilities that Apple added to its Siri system last September.

Emotient is one of several AI-related small companies that Apple has acquired in the past six months. The others include Perceptio, which develops deep-learning image recognition for mobile processors, and VocalIQ, whose technology can enhance a computer’s ability to decipher natural speech.
