Computer scientists combine computer vision and brain-computer interface for faster mine detection

By:

- Ioana Patringenaru

Computer scientists at the University of California, San Diego, have combined sophisticated computer vision algorithms and a brain-computer interface to find mines in sonar images of the ocean floor. The study shows that the new method detects mines considerably faster than existing methods, which rely mainly on visual inspection by a mine detection expert.

“Computer vision and human vision each have their specific strengths, which combine to work well together,” said Ryan Kastner, a professor of computer science at the Jacobs School of Engineering at UC San Diego. “For instance, computers are very good at finding subtle, but mathematically precise patterns while people have the ability to reason about things in a more holistic manner, to see the big picture. We show here that there is great potential to combine these approaches to improve performance.”

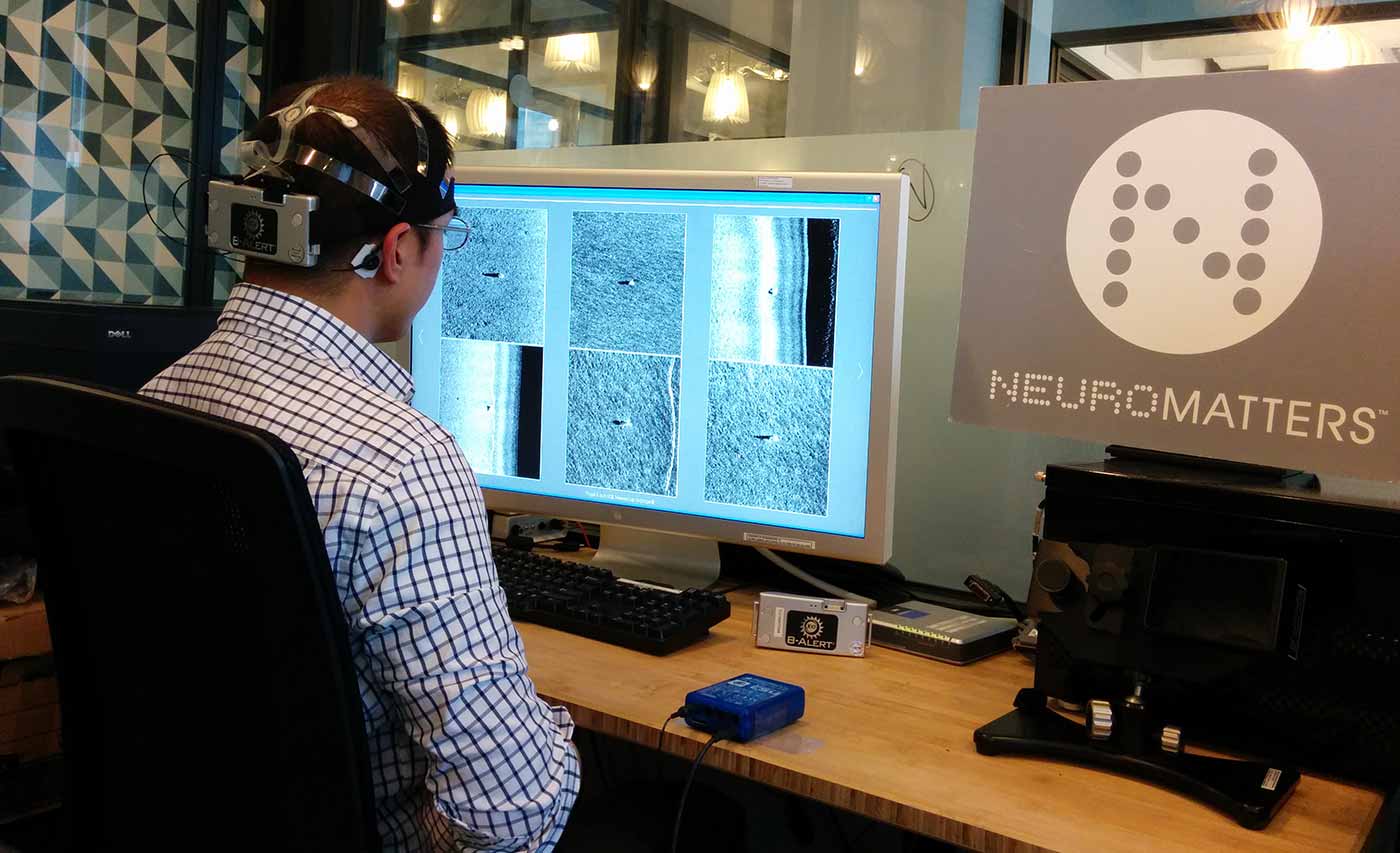

A Neuromatters employee demonstrates how subjects in the mine detection study did their screening. Photo: Neuromatters

Researchers worked with the U.S. Navy’s Space and Naval Warfare Systems Center Pacific (SSC Pacific) in San Diego to collect a dataset of 450 sonar images containing 150 inert, bright-orange mines placed in test fields in San Diego Bay. The images were collected with an underwater vehicle equipped with sonar. In addition, researchers trained their computer vision algorithms on a dataset of 975 images of mine-like objects.

In the study, researchers first showed six subjects the complete dataset, before it had been screened by computer vision algorithms. Then they ran the image dataset through mine-detection computer vision algorithms they had developed, which flagged the images most likely to include mines. They then showed the results to subjects outfitted with an electroencephalogram (EEG) system, programmed to detect brain activity indicating that a subject had reacted to an image because it contained a salient feature, most likely a mine. Subjects detected mines much faster when the images had already been processed by the algorithms. The researchers published their results recently in the IEEE Journal of Oceanic Engineering.

The algorithms are what’s known as a series of classifiers, working in succession to improve speed and accuracy. The classifiers are designed to capture changes in pixel intensity between neighboring regions of an image. The goal is for each classifier to pass along 99.5 percent of true positives but only 50 percent of false positives. As a result, the true positive rate remains high, while the number of false positives shrinks with each pass.
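To see why this design keeps detections high while shedding false alarms, the per-stage targets can be compounded over several stages. The short Python sketch below is only an illustration: it assumes each stage meets the stated targets exactly and acts independently of the others, which a real series of classifiers would only approximate. The function name `cascade_rates` and the stage counts are hypothetical and are not taken from the study.

```python
def cascade_rates(stages, tp_per_stage=0.995, fp_per_stage=0.5):
    """Return (true-positive rate, false-positive rate) after `stages` passes.

    Assumption: every stage hits its target exactly and independently,
    so the overall rates are simply the per-stage rates multiplied together.
    """
    return tp_per_stage ** stages, fp_per_stage ** stages


# Illustrative stage counts; the article does not say how many stages were used.
for n in (1, 5, 10):
    tp, fp = cascade_rates(n)
    print(f"{n:2d} stages: keeps {tp:.1%} of true positives, "
          f"{fp:.3%} of false positives")
```

Under these assumptions, ten stages would still keep about 95 percent of true mines while passing roughly 0.1 percent of false alarms.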

Researchers took several versions of the dataset generated by the classifiers and showed them to six subjects outfitted with the EEG gear, which had first been calibrated for each subject. Subjects performed best on the dataset containing the most conservative results generated by the computer vision algorithms. They sifted through a total of 3,400 image chips, each 100 by 50 pixels. Each chip was shown to the subject for only one-fifth of a second (0.2 seconds), just long enough for the EEG-related algorithms to determine whether the subject’s brain signals showed that they had seen anything of interest.
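The presentation rate implies a short screening session. As a rough check, the snippet below works out the total viewing time, assuming a single subject viewed all 3,400 chips back to back with no pauses between them (the article does not say either way). The variable names are illustrative.

```python
CHIPS = 3400        # image chips reported in the article
MS_PER_CHIP = 200   # one-fifth of a second per chip, in milliseconds

# Assumption: chips are shown consecutively with no gaps or breaks.
total_seconds = CHIPS * MS_PER_CHIP / 1000
minutes, seconds = divmod(total_seconds, 60)
print(f"{total_seconds:.0f} s total, about {int(minutes)} min {int(seconds)} s")
```

On that assumption the viewing itself would take a little over 11 minutes.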

All subjects performed better than when shown the full set of images without the benefit of prescreening by computer vision algorithms. Some subjects also performed better than the computer vision algorithms on their own.

“Human perception can do things that we can’t come close to doing with computer vision,” said Chris Barngrover, who earned a computer science Ph.D. in Kastner’s research group and is currently working at SSC Pacific. “But computer vision doesn’t get tired or stressed. So it seemed natural for us to combine the two.”

In addition to Barngrover and Kastner, co-authors on the paper include Paul DeGuzman, a program manager at Neuromatters LLC, and Alric Althoff, a Ph.D. student in computer science at the Jacobs School of Engineering at UC San Diego. Neuromatters is a pioneer in brain-computer interface technologies with its C3Vision™ system, which was adapted for use in this project. The researchers would also like to thank Advanced Brain Monitoring, a medical devices company, for the use of the company’s EEG headset.
