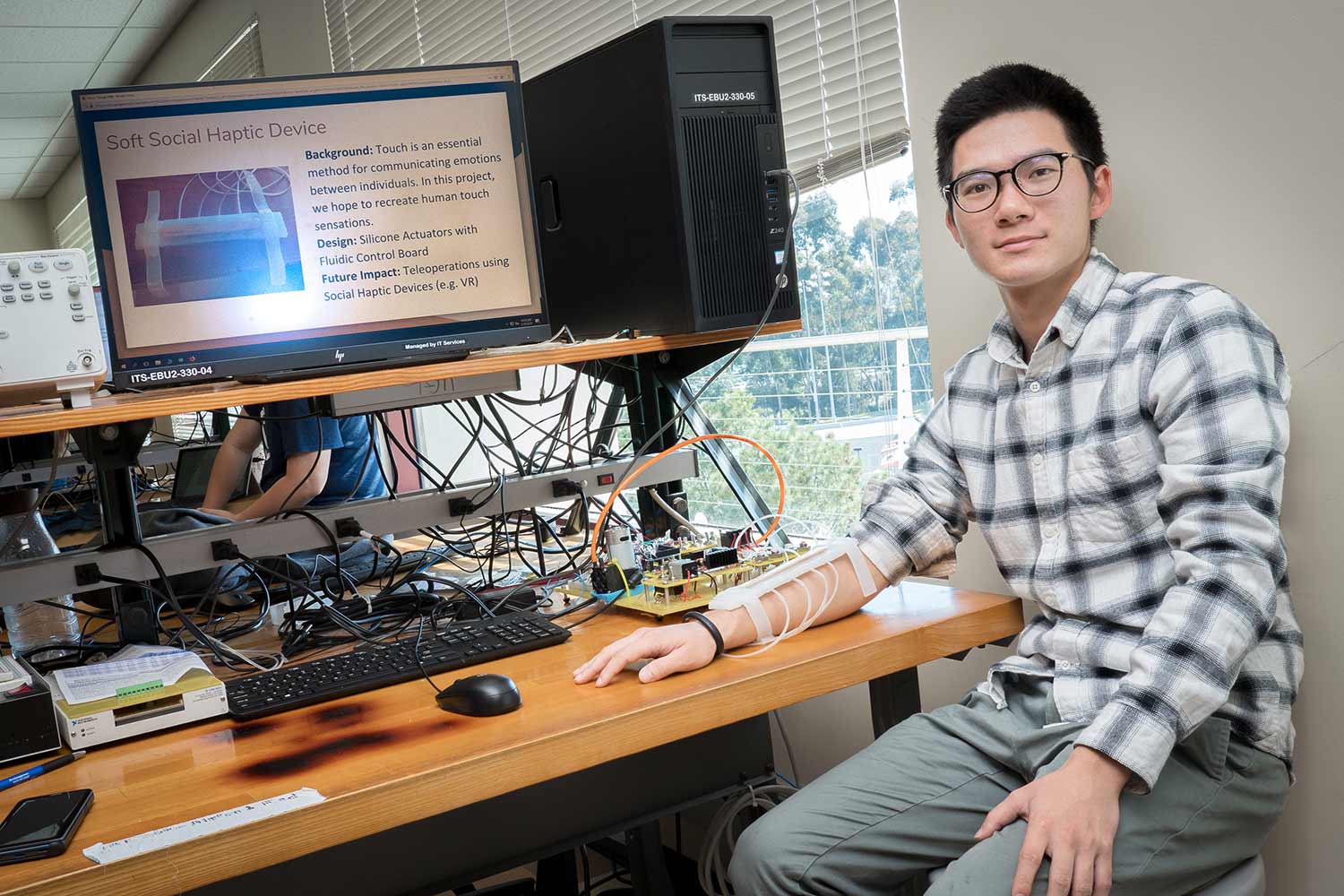

Jui-Te Lin, a master's student, with the device he built for UC San Diego's first haptic interfaces class.

A tool to help the visually impaired navigate crowded spaces; an interface to assist surgeons during a complex procedure; and a display that can change shape when heat is applied. These were all projects developed by students in the first-ever haptic interfaces class offered at the Jacobs School of Engineering at UC San Diego.

Haptics refers to interactions involving the sense of touch, including force (for example, pushing a button), texture, motion and vibration. Haptic interfaces can be designed to reproduce these interactions and enable users to feel virtual or remote environments by applying forces or by stimulating the skin. Haptic feedback can be used in robotic surgery, for example, as well as in everyday devices such as smartphones that tap back or vibrate when the flashlight turns on.

Two students developed a harness to help blind people navigate crowded spaces.

The class, aptly named Haptic Interfaces, was taught by Tania Morimoto, a professor in the Department of Mechanical and Aerospace Engineering. Her research focuses on the design and control of flexible and soft robots for unstructured, unknown environments. Her goal is to develop safer, more dexterous robots and human-in-the-loop interfaces, especially for medical applications such as surgeries. Morimoto is also part of the Contextual Robotics Institute at UC San Diego.

“I wanted the class to be hands-on to teach students how to design, build and program a haptic system,” Morimoto said. “I wanted them to get a solid foundation on the field of haptics and how they can provide touch feedback to users interacting with virtual environments and teleoperated robots.”

The students, most of whom are working toward a master’s or doctoral degree, got to show what they had learned by putting together a final project.

Matthew Kohanfars and Dylan Steiner developed an interface to help guide surgeons during a procedure that corrects irregular heart rhythms. The surgery, called atrial fibrillation ablation, consists of threading catheters into the heart and applying heat to destroy the parts of the heart muscle that cause erratic electrical signals.

One potential complication of the surgery is the esophagus fusing with an artery, and the students’ interface is designed to help surgeons avoid that. It is worn on the forearm and connected to a temperature probe inside the patient’s esophagus. The interface’s main control system would use temperature readings and the position of the surgeon’s tools to guide the tools away from the esophagus wall before they can damage it. It does so by applying vibration cues to the forearm in the direction that the tools need to move; currently, this guidance is given with sounds. The interface also notifies the user if the temperature at the surgical site has increased by 1 degree Celsius, so that the surgery can be stopped before the heat damages tissue in the esophagus.
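The cue logic described above can be sketched roughly as follows. This is a minimal illustration, not the students' implementation: the four-motor layout, the 2D coordinates and the function names are all assumptions made for the example. Only the 1 degree Celsius alert threshold and the idea of vibrating in the direction the tools need to move come from the article.

```python
import math

# From the article: the user is notified after a 1 degree Celsius rise.
TEMP_RISE_ALERT_C = 1.0

# Hypothetical layout: four vibration motors around the forearm,
# each associated with one direction in a 2D plane.
MOTOR_DIRECTIONS = {
    "left": (-1.0, 0.0),
    "right": (1.0, 0.0),
    "up": (0.0, 1.0),
    "down": (0.0, -1.0),
}


def temperature_alert(baseline_c, current_c):
    """Return True once the probe reading has risen by the alert threshold."""
    return (current_c - baseline_c) >= TEMP_RISE_ALERT_C


def guidance_motor(tool_xy, hazard_xy):
    """Pick the motor that best points away from the hazard.

    tool_xy and hazard_xy are assumed (x, y) positions of the surgeon's
    tool and of the esophagus wall. Returns None if they coincide,
    because no direction is defined in that case.
    """
    dx = tool_xy[0] - hazard_xy[0]
    dy = tool_xy[1] - hazard_xy[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        return None
    ux, uy = dx / norm, dy / norm
    # Choose the motor whose direction has the largest dot product
    # with the unit vector pointing away from the hazard.
    return max(
        MOTOR_DIRECTIONS,
        key=lambda name: MOTOR_DIRECTIONS[name][0] * ux
        + MOTOR_DIRECTIONS[name][1] * uy,
    )
```

In this sketch, a tool sitting to the right of the hazard triggers the "right" motor, nudging the surgeon farther from the esophagus wall. A real system would also need filtering of noisy probe readings and a 3D position estimate.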

Ph.D. student Andi Frank helps teaching assistant Saurabh Jadhav try on the haptic harness she and fellow student Aamodh Suresh developed.

Bringing the hardware and programming together was the hardest part of the project, the two students said. “We finished at 4:30 a.m. today,” Kohanfars added.

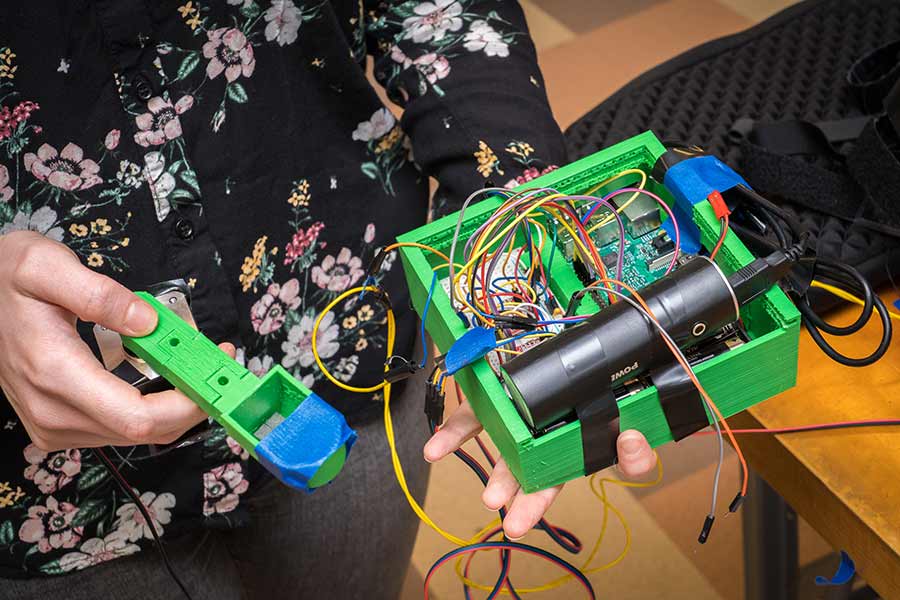

Andi Frank and Aamodh Suresh built a tool to help the visually impaired navigate in crowded spaces, where using a cane can be difficult. The goal was to mimic the sensation of having a hand resting on your shoulder and directing you to turn left or right, stop or go. Frank and Suresh accomplished this by building a harness with two small 3D-printed appendages, one for each shoulder. The harness is also equipped with tiny vibrating motors, and is connected to a circuit board and power source. Right now, the device is controlled remotely over a wireless link.

During a demo, Morimoto donned the harness and closed her eyes as Frank and Suresh remotely guided her around lab benches and chairs. The students said they struggled with replicating the exact force the device’s “guiding hands” needed to apply. To simplify the system, they decided to use vibration to signify stop and go.
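The harness's command scheme, with shoulder cues for turning and vibration for stop and go, could be represented along these lines. This is a sketch under stated assumptions: the actuator names, the choice that the left appendage signals a left turn, and the pulse counts are hypothetical and are not taken from the students' design.

```python
from enum import Enum


class Command(Enum):
    LEFT = "left"
    RIGHT = "right"
    STOP = "stop"
    GO = "go"


# Assumed encoding: two vibration pulses for stop, one for go.
# The article says only that vibration signifies stop and go.
VIBRATION_PULSES = {Command.STOP: 2, Command.GO: 1}


def actuator_outputs(command):
    """Map a remote command to hypothetical actuator settings.

    Turn commands press the appendage on the matching shoulder
    (an assumption); stop and go use vibration only.
    """
    return {
        "left_shoulder_press": command is Command.LEFT,
        "right_shoulder_press": command is Command.RIGHT,
        "vibration_pulses": VIBRATION_PULSES.get(command, 0),
    }
```

Keeping turn cues and stop/go cues on separate actuators mirrors the simplification the students described, since the wearer never has to tell two kinds of shoulder pressure apart.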

As part of the Healthcare Robotics Lab led by Professor Laurel Riek, Frank studies ways robots can interact more naturally with humans. Haptics seems like a promising avenue for communication between robot and human, she said, because it is not disruptive to others the way sound can be and does not require a direct line of sight the way a screen display does.

“I feel I've gained a greater appreciation for how the sense of touch can be used as a mode of communication between humans and technology,” Frank said. “We don't really think about how much we rely on touch to perceive our environment until we try to make virtual environments that seem so weird without touch.”
