SDSC’s Gordon: A Non-Conventional Supercomputer Fosters Non-Traditional Research Projects

By:

- Jan Zverina

Published Date

By:

- Jan Zverina

Share This:

Article Content

When the San Diego Supercomputer Center (SDSC) at the University of San Diego, California, debuted Gordon early last year, the system’s architects envisioned that its innovative features – such as the first large-scale deployment of flash storage (300 terabytes) in a high-performance computer – would open the door to new areas of research.

“Gordon's extraordinary speed makes it possible for researchers to tackle questions they couldn't address before, simply because they didn’t have a system that was uniquely tailored to handle the challenges of data intensive computing,” said SDSC Michael Norman just prior to Gordon’s launch. “I view Gordon as a new kind of vessel, a ship that will take us on new voyages to makes new discoveries in new areas of science.”

Related Story

This story is the first in a series highlighting projects using the San Diego Supercomputer Center’s newest HPC resource. Read part two here.

Fast forward to the first half of 2013, and Gordon has already been used in about 300 research projects, ranging from domains that traditionally use high-performance computing (HPC) resources such as chemistry, engineering, physics, astronomy, molecular biology, and climate change. Lately, scientists have been turning to Gordon to assist them in areas that are relatively new to advanced computation, such as political science, mathematical anthropology, finance, and even the cinematic arts.

“We have made it a priority to reach out to data-intensive communities that could further their research by taking advantage of Gordon’s unique capacity to rapidly process huge amounts of data or many multiples of smaller data sets that have become commonplace across the sciences,” said Norman, principal investigator for the five year, $20 million supercomputer project funded by National Science Foundation (NSF).

Gordon, named for its extensive use of flash-based memory common in smaller devices such as cellphones or laptop computers, can process data-intensive problems 10 times or more quickly than conventional supercomputers using spinning disks. Available for use by industry and government agencies, Gordon is part of the NSF’s XSEDE (eXtreme Science and Engineering Discovery Environment) program, a nationwide partnership comprising 16 supercomputers as well as high-end visualization and data analysis resources. Full details on Gordon can be found at http://www.sdsc.edu/supercomputing/gordon/.

Following are three examples of what could be considered “non-traditional” computational research assisted by Gordon’s unique features.

Large Scale Video Analytics

Virginia Kuhn, associate director of the Institute for Multimedia Literacy and associate professor in the School of Cinematic Arts at the University of Southern California (USC), has been using Gordon to search, index, and tag vast media archives in real time, applying a hybrid process of machine analytics and crowd-sourced tagging.

The Large Scale Video Analytics (LSVA) project is a collaboration among cinema scholars, digital humanists, and computational scientists from the IML (Institute for Multimedia Literacy); ICHASS (Institute for Computing in the Humanities, Arts and Social Sciences); and XSEDE. The project is customizing the Medici content management system to apply various algorithms for image recognition and visualization into workflows that will allow real‐time analysis of video.

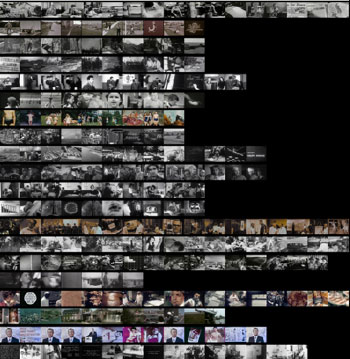

Comparative visualization reveals similarities and differences between multiple films within an archive. Image by Virginia Kuhn, USC

“Contemporary culture is awash in moving images,” said Kuhn, principal investigator for the research project. “There is more video uploaded to YouTube in a day than a single person can ever view in a lifetime. As such, one must ask what the implications are when it comes to the issues of identity, memory, history, or politics.”

The LVSA project turned to Gordon for its extensive and easily accessed storage capacity, since video data collections can easily reach multiple terabytes in size. Work was initially performed using a dedicated Gordon I/O node, and later expanded to also include four dedicated compute nodes. “Persistent access to the flash storage in the I/O nodes has been critical for minimizing data access times, while allowing interactive analysis that was so important to this project,” said Bob Sinkovits, Gordon applications lead at SDSC.

The system enabled data subsets that were most heavily used to reside in areas that provided fast random access.

Predictive Analytics Using Distributed Computing

For operations on large data sets, the amount of time spent moving data between levels of a computer’s storage and memory hierarchy often dwarfs the computation time. In such cases, it can be more efficient to move the software to the data rather than the traditional approach of moving data to the software.

Distributed computing frameworks such as Hadoop take advantage of this new paradigm. Data sets are divided into chunks that are then stored across the Hadoop cluster’s data nodes using the Hadoop Distributed File System (HDFS), and the MapReduce engine is used to enable each worker node to process its own portion of the data set before the final results are aggregated by the master node. Applications are generally easier to develop in the MapReduce model, avoiding the need to directly manage parallelism using MPI, Pthreads, or other fairly low-‐level approaches.

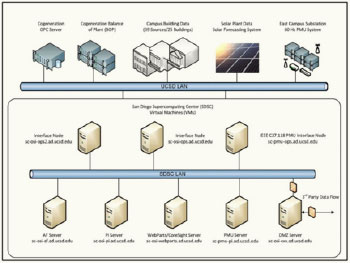

This collection, spanning 17,000 inputs from the network simulation model and 10,000 additional measurements from electric power meters, was analyzed on SDSC’s Gordon Hadoop cluster. Image by David Zeglinsky, OSISoft

Yoav Freund, a computer science and engineering professor at UC San Diego, specializes in machine learning, a relatively new field of research which bridges computer science and statistics. A recognized authority in big data analytics, Freund recently taught a graduate-level class in which students used a dedicated Hadoop cluster on Gordon to analyze data sets ranging in size from hundreds of megabytes to several terabytes. While student projects were diverse – analysis of temperature and airflow sensors on the UC San Diego campus, detection of failures in the Internet through the analysis of the communication between BGP (Border Gateway Protocol) nodes, and predicting medical costs associated with vehicle accidents – they had one need in common: the need for rapid turnaround of data analysis.

“Given the large data movement requirements and the need for very rapid turnaround, it would not have been feasible for the students to work through a standard batch queue,” said Freund. “Having access to flash storage greatly reduced the time for random data access.”

A Gordon I/O node and the corresponding 16 compute nodes were configured as a dedicated Hadoop cluster, with the HDFS mounted on the solid state drives (SSDs). To enable experimentation with Hadoop, SDSC also deployed MyHadoop, which allows users to temporarily create Hadoop instances through the regular batch scheduler.

Modeling Human Societies

Doug White, a professor of anthropology at UC Irvine, is interested in how societies, cultures, social roles, and historical agents of change evolve dynamically out of multiple networks of social action. These networks can be understood using graph theory, where individuals are represented as vertices and the relationships between them are represented using edges.

In the 1990s, White and his colleagues discovered that networks could be meaningfully analyzed by a new algorithm that derives from one of the basic theorems in graph theory: the Menger vertex-connectivity theorem announced in 1927.

Computer scientists had previously coded Menger's edge-connectivity theorem, known as the Max-Flow (Ford-Fulkerson) network algorithm, to optimize the routing of traffic. But Menger's theorem also proved that vertex-connectivity, defined as the number of independent paths between a pair of vertices that share no vertices (other than the end points) in common, is equivalent to the number of vertices that need to be removed from the graph so that there is no longer a path between the two vertices.

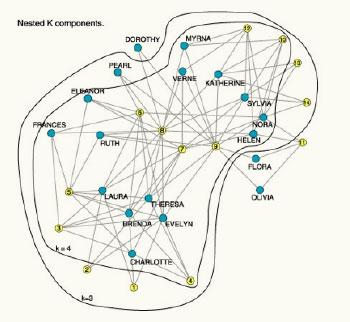

K‐cohesive levels of "southern women" neighborhood parties. K varies from 1 to 4 (connected to 4-connectivity). Image from James Moody’s and Douglas R. White’s ‘Structural Cohesion and Embeddedness: A Hierarchical Conception of Social Groups’. American Sociological Review 68(1):1-25. Outstanding Article Award, Mathematical Sociology, 2004

Using Menger’s theorem, it is possible to identify cohesive groups in which all members remain joined by paths as vertices are removed from the network. Finding the boundaries of cohesive subgroups, however, remain a challenge for computation, far more challenging than finding cliques of fully connected sets of vertices within a graph. White and his team are currently testing their recently developed software on synthetic networks constructed from well-known models, and plan to apply their methods to data sets derived from networks of co-authorship, benefits of marriage alliances, and splits in the scientific citation networks in periods of contention.

“The most computationally difficult step in the team's design of new high-performance software requires calculating the number of vertex-independent paths between all pairs of vertices in the network,” said White.

SDSC computational scientist Robert Sinkovits addressed scalability issues in the parallel algorithm and ported the application to Gordon’s vSMP nodes following guidelines provided by ScaleMP. For larger graphs, such as a 2400 vertex network built using the Watts-Strogatz small-world model, near linear scalability was achieved when using all 256 cores (an estimated 243x speedup relative to one core).

“We’re coordinating teams at UC Irvine, UC San Diego, the Santa Fe Institute, and Argonne National Laboratory so that after 130 years, we finally put comparative research on a solid footing of replicable scientific findings that are comparable from small to large and super-large datasets,” said White.

Please click on this link for more on White’s research.

Share This:

You May Also Like

Stay in the Know

Keep up with all the latest from UC San Diego. Subscribe to the newsletter today.