Computer Scientists Develop an Interactive Field Guide App for Birders

Ultimate goal is better image searches on the web

By:

- Ioana Patringenaru


Computer Science Professor Serge Belongie is the lead researcher on the Visipedia app.

A team of researchers led by computer scientist Serge Belongie at the University of California, San Diego, has good news for birders: they have developed an iPad app that will identify most North American birds, with a little help from a human user.

The app is essentially an interactive field guide: computer vision algorithms analyze the picture the user submits, ask questions and call up pages with pictures and information about the bird species most likely to match. The researchers’ ultimate goal is to fill a gap in the world of online search. Text-driven search, such as that offered by Google and Wikipedia, has been wildly successful. But searching with images rather than words has so far proven much more difficult.

Image search involves uploading a picture to a search tool, such as Google Goggles, and asking what it shows. This works well for famous landmarks and other well-known images. But it mostly fails with less familiar subjects, such as images of animals and plants.

Computer scientists at UC San Diego’s Jacobs School of Engineering, UC Berkeley and Caltech have designed a search system that can interact with users to provide more accurate results. For example, when the system is stumped, users can label parts of an image, such as a bird’s head, chest and tail, and provide information about other characteristics, such as the color of the bird’s plumage.

“We chose birds for several reasons: there is an abundance of excellent photos of birds available on the Internet, the diversity in appearance across different bird species presents a deep technical challenge and, perhaps most importantly, there is a large community of passionate birders who can put our system to the test,” explained Belongie, the Jacobs School of Engineering computer scientist at the heart of the project.

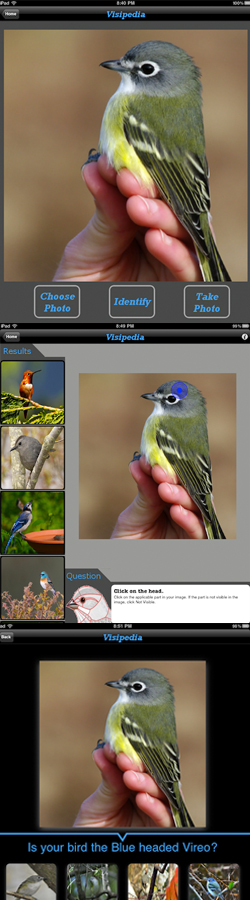

The Visipedia app asks questions to help the user identify bird species. From top to bottom:

- User submits image

- App asks user to tap on bird's head

- App uses that information to correctly identify the bird's species

The Cornell Lab of Ornithology is contributing its database of images showing males, females and juveniles of more than 500 North American species. Citizen scientists working with the lab will label the images and expand the database further. The target is to have 100 expert-identified images for each species.

“We are helping Visipedia reach an audience of birders who are hungry for this kind of technology,” said Jessie Barry, a researcher at the Cornell Lab of Ornithology.

The hope is that the app will strengthen the users’ connection to the natural world and their desire to preserve natural habitats. Barry and her team are recruiting citizen scientists through the lab’s website, allaboutbirds.org, which has more than one million unique visitors a month. When researchers asked birders to help label birds’ colors for Visipedia earlier this year, they received one answer a minute, on average. Birders are eager to help train the Visipedia system, Barry said, because they know it will ultimately become a tool that will help them in the field.

Belongie hopes the app will ignite a movement among other enthusiasts, who will partner with computer scientists to create similar apps for everything from flowers to butterflies to lichens and mushrooms.

“We can’t solve all the challenges by ourselves, but we believe our framework can,” Belongie said.

How the app works: the user experience

Users upload their pictures to an iPad equipped with the Visipedia app. The app’s algorithms process the picture and display a series of results deemed a good match. Sometimes the app is spot on. But sometimes, it requires some additional help from the user.

To find a more accurate result, the app will ask the user to identify a specific part of the bird in the original picture, such as the head, the tail or the wing. Sometimes the app will also ask what colors can be found on that part of the bird. Based on the user’s answers, the app refines its list of results until it finds the correct answer.

This method is similar to a game of 20 questions, played between the system and the user. But the system analyzes the image first to minimize the number of questions a user has to answer. Typically, the app takes about five to six questions to get it right, Belongie said. The goal is to fine-tune it until the right answer pops up after only two to three questions.
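The "20 questions" idea can be illustrated with a standard technique: keep a probability for each candidate species, ask the yes/no question whose answer is expected to reduce uncertainty the most (expected information gain), and update the probabilities with Bayes' rule after each answer. The sketch below is a minimal illustration under stated assumptions, not Visipedia's actual code: the species, the questions ("crest", "red_body") and every probability are made up, and the starting prior simply stands in for the vision system's initial analysis of the photo.

```python
import math

# Hypothetical example data, not taken from Visipedia.
# PRIOR stands in for the vision system's first guess about the photo.
PRIOR = {"cardinal": 0.4, "scarlet_tanager": 0.4, "blue_jay": 0.2}
# P(user answers "yes" | species) for each hypothetical question.
LIKELIHOOD = {
    "crest": {"cardinal": 0.95, "scarlet_tanager": 0.05, "blue_jay": 0.95},
    "red_body": {"cardinal": 0.95, "scarlet_tanager": 0.95, "blue_jay": 0.05},
}


def entropy(dist):
    """Shannon entropy (in bits) of a {species: probability} dict."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def update(belief, question, answer, likelihood):
    """Bayes' rule: P(species | answer) is proportional to P(answer | species) * P(species)."""
    post = {}
    for species, p in belief.items():
        p_yes = likelihood[question][species]
        post[species] = p * (p_yes if answer else 1 - p_yes)
    total = sum(post.values())
    return {species: p / total for species, p in post.items()}


def expected_info_gain(belief, question, likelihood):
    """Expected drop in entropy from asking `question`."""
    p_yes = sum(p * likelihood[question][s] for s, p in belief.items())
    expected_after = 0.0
    for answer, p_answer in ((True, p_yes), (False, 1 - p_yes)):
        if p_answer > 0:
            expected_after += p_answer * entropy(update(belief, question, answer, likelihood))
    return entropy(belief) - expected_after


def best_question(belief, questions, likelihood):
    """Pick the question with the largest expected information gain."""
    return max(questions, key=lambda q: expected_info_gain(belief, q, likelihood))


def identify(prior, questions, likelihood, answer_fn, threshold=0.9, max_questions=20):
    """Ask the most informative remaining question until one species is likely enough."""
    belief = dict(prior)
    remaining = list(questions)
    asked = 0
    while remaining and asked < max_questions and max(belief.values()) < threshold:
        q = best_question(belief, remaining, likelihood)
        remaining.remove(q)
        belief = update(belief, q, answer_fn(q), likelihood)
        asked += 1
    return max(belief, key=belief.get), belief, asked


if __name__ == "__main__":
    # Simulated user looking at a scarlet tanager: red body, no crest.
    species, belief, asked = identify(PRIOR, LIKELIHOOD, LIKELIHOOD, lambda q: q == "red_body")
    print(species, asked)  # -> scarlet_tanager 1
```

Because the prior already rules out much of the uncertainty, the first question chosen is the one that best splits the remaining candidates ("crest" separates the tanager from the other two), which is the same reason analyzing the image first can shorten the game.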

The app also allows the user to get additional information about the bird species from Wikipedia and Google. Users can also see additional pictures on Flickr.

Under the hood

Computer vision searches involve two levels of processing. Entry-level categories identify the kind of image the user is looking for, such as a bird, a car, a flower and so on. For Visipedia, that category is already preset, since the user is exclusively searching for birds.

The second level of processing involves fine-grained categories. Each category is defined by its parts and attributes: for birds, the parts would be the head, wings, tail and so on, and the attributes would include their color and shape. The app generates a heat map that helps the system determine which areas of the picture are likely to be bird parts. Each part and attribute is stored in the app’s code as a long list of numbers, called a vector. As more users work with the app, they help train the app’s detectors to become more accurate. The process should yield some additional benefits, Belongie said. For example, code designed to recognize butterflies could also be helpful for moths.
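A toy version of these two ideas might look like the following: pick the highest-scoring cell of a part heat map as the part's location, then compare an attribute vector observed there against one reference vector per species using cosine similarity. This is a minimal sketch, assuming a made-up four-number attribute layout ([red, blue, black, crest]) and made-up species templates; the article does not say how Visipedia actually builds or compares its vectors, and real systems typically learn both the detectors and the attribute scores from labeled images.

```python
import math

# Hypothetical attribute layout: [red, blue, black, crest], each scored 0..1.
# These species "templates" are illustrative only, not real Visipedia data.
TEMPLATES = {
    "cardinal": [1.0, 0.0, 0.3, 1.0],
    "blue_jay": [0.0, 1.0, 0.4, 1.0],
    "scarlet_tanager": [1.0, 0.0, 0.0, 0.0],
}


def locate_part(heat_map):
    """Return (row, col) of the highest-scoring cell in a 2-D heat map."""
    cells = ((r, c) for r, row in enumerate(heat_map) for c in range(len(row)))
    return max(cells, key=lambda rc: heat_map[rc[0]][rc[1]])


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_species(observed, templates):
    """Sort species names from best to worst match for an observed attribute vector."""
    scores = {name: cosine(observed, vec) for name, vec in templates.items()}
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical heat map for "head": higher values mean "more likely a head here".
    head_heat_map = [
        [0.1, 0.2, 0.1],
        [0.3, 0.9, 0.4],
        [0.0, 0.2, 0.1],
    ]
    print(locate_part(head_heat_map))  # -> (1, 1)
    # Hypothetical attribute scores for the region around that location.
    observed = [0.9, 0.0, 0.3, 0.8]
    print(rank_species(observed, TEMPLATES))
    # -> ['cardinal', 'scarlet_tanager', 'blue_jay']
```

The ranked list plays the role of the "series of results deemed a good match" that the app shows; a user's answer about a part or color would then reweight that list.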

The app’s user interface was designed by computer science undergraduate student Grant Van Horn. Its search system is the work of computer science graduate students Catherine Wah and Steven Branson in the Department of Computer Science and Engineering at the Jacobs School. Belongie has applied for a grant that would allow him to hire a developer, who could package the app’s code for other tablets and smartphones and maintain it. That’s what it will take to get the app into the App Store, the researcher explained. Meanwhile, a demo version of the app is available upon request via http://visipedia.org.
